This is an interesting article because, in typical Schrödinger fashion, I both agree and disagree.

Yes, Big Tech calling for a pause on AI development is self-serving. This is unavoidable. But it doesn't make the concern unfounded. It is in fact quite legitimate, although unfortunately much too late and unlikely to bring any respite.

The problem is that we now have extremely capable tools for developing AI that give powerful results, although we do not understand exactly what is going on inside the black boxes. The consequence is that AI is advancing by leaps and bounds while our understanding of it crawls far behind, with no hope of catching up anytime soon.

(If you really want to understand why we cannot get the "alignment" right, you can listen to the very interesting interview with Eliezer Yudkowsky below. Be warned, though, that this video has two drawbacks: it is too long, at over 3 hours, and the interviewer is not well versed in the subject and consequently has difficulty grasping the technical concepts being explained.)

Authored by Mark Jeftovic via BombThrower.com,

Disruption for thee, but not for me…

For years, central banks have been sounding the alarm on Bitcoin and cryptocurrencies, fearmongering about the threats they posed to the financial system and the global climate, at one point predicting that Bitcoin’s electricity demand would literally consume all available energy in the world by 2020.

(Fact check: it didn’t)

Never mind that under the stewardship of the central banks, the financial system has lurched from one crisis to the next for decades on end, each one an order of magnitude worse than the last.

The policy response to each crisis (expand credit, suppress interest rates, print money) simply encouraged moral hazard, perverse incentives, and an ever-widening wealth gap, while providing the setup for the next crisis. With this unbroken string of failures under their belts, it’s always rich to listen to these insular technocrats, who have zero skin in the game, pontificating about the meltdowns they created. Especially when they get worked up about the hazards of a phenomenon that has since emerged to make them obsolete.

Now it’s Big Tech’s turn:

Warning us about the existential “risks to society” of AI and calling for a time-out. The very people who have benefitted the most from exploiting disruptive technology, regardless of the collateral damage, and in the process became literally the wealthiest people in history, now look at AI in the hands of the rabble, and there’s a flag on the play.

When these tech oligarchs blow out entire industries and replace them with quasi-monopolies in which they’re majority shareholders, it’s just creative destruction. #LearnToCode, baby.

But if a total game changer suddenly finds its way into the hands of the plebs, and the Big Tech incumbents realize it could be their turn to get their asses disrupted… by commoners at that… then suddenly we’re looking at a crime against humanity.

The Future of Life open letter was signed by everybody from Elon (“self-driving robotaxis any minute now”) Musk and Steve Wozniak to Yuval (“the plebs are hackable animals”) Harari and sundry other AI incumbents.

According to their About Page,

The Future of Life Institute is an independent non-profit organisation funded by a range of individuals and organisations who share our desire to reduce global catastrophic and existential risk from powerful technologies.

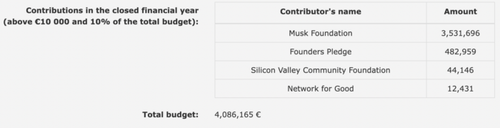

And their entry in the European Transparency Register discloses that their funding:

…is about 87% provided by the Musk Foundation. Speaking of risks from powerful technologies, let’s remember that 19 people have been killed by Teslas in self-driving mode and another 21 when their Teslas exploded as a result of various crashes (out of a total known pool of 373 Tesla deaths thus far).

Who is really threatened by AI?

When I first started playing with ChatGPT, I never for a second believed it was any kind of magical artificial intelligence. I’ve always called AI “Algorithmic Imitation”.

However, it is a quantum leap forward in natural-language interfaces. I instantly recognized who was most at risk from this: the search engines, namely Google, and the entire ecosystem of made-for-AdSense “content” websites whose raison d’être is to game search algos.

Hence Google’s panicked, ham-fisted rush job on Bard, and Microsoft’s debacle trying to incorporate ChatGPT (in whose maker, OpenAI, they are a significant investor) into their own Bing. (Soon I’ll be releasing a serialized collection of pieces, working title “Infernal Algorithmica”, which is a technological grimoire exploring the lower circles of the pay-per-click advertising model. Sign up for the list if you want that when it comes out.)

This is nothing new. This is the same dynamic that has played out with every disruptive tech innovation, ever. There are even accounts from the decline of the Roman Empire of how the alchemist who discovered aluminum was beheaded when the Emperor suspected that this breakthrough innovation might devalue silver (the story was described by the Roman writer Petronius, circa 27 AD, in his novel, Satyricon, although Pliny the Elder wrote that it could have been apocryphal).

Apocryphal or not, the tension between disruptive, rising technologies, and the elites who control the operations and markets of the incumbent ones is real and perennial.

In “Innovation and Its Enemies: Why People Resist New Technologies”, Calestous Juma writes:

“[Nearly all] debates over new technologies are framed in context of risks to moral values, human health and environmental safety. But behind these concerns often lie deeper, but unacknowledged, socioeconomic considerations”.

“The face-off between the established technological order and new aspirants leads to controversies…perceptions about immediate risks and long-term distribution of benefits influence the intensity of concerns over new technologies”.

These calls for a moratorium on AI, the abolition or over-regulation of cryptos and Bitcoin, and the inevitable calls for technocratic control over your energy consumption and individual carbon footprint metering are all riffing on the same theme: you’re too stupid and infantile to use these technologies responsibly. Only The State can figure it out. (No wonder most governments of the world are on a mission to ban cash, privacy …and guns).

People like Musk, Harari and the increasingly batshit Eliezer Yudkowsky (who wants to forcibly curtail computing power, to the point of advocating the military bombardment of rogue data centers abroad) should know this.

Yudkowsky has been mentioned in these pages before. He’s the one who is convinced AI will inevitably result in the extermination of all humanity.

I had a lawyer who liked to say “You can’t suck and blow at the same time”. Either you want to ride a tide of rapid technological change to unparalleled living standards, personal wealth and privilege (but which affords everybody else those same opportunities), or not so much. You can’t do both.
