Interesting idea, but too late! Competition guarantees that someone will develop an AI with military applications. It is unavoidable. This is how arms races work. We can't even negotiate a truce in Ukraine. How could anyone renounce a weapon that will give a significant advantage?

Musk, Wozniak Call For Pause In Developing 'More Powerful' AI Than GPT-4

Elon Musk, Steve Wozniak, AI pioneer Yoshua Bengio and others have signed an open letter calling for a six-month pause in developing new AI tools more powerful than GPT-4, the technology released earlier this month by Microsoft-backed startup OpenAI, the Wall Street Journal reports.

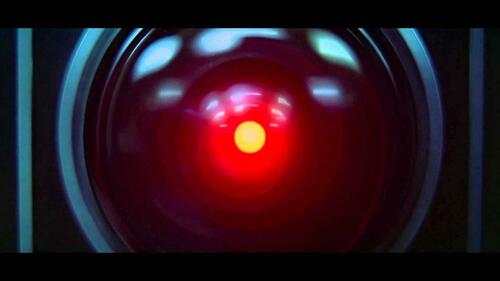

Contemporary AI systems are now becoming human-competitive at general tasks, and we must ask ourselves: Should we let machines flood our information channels with propaganda and untruth? Should we automate away all the jobs, including the fulfilling ones? Should we develop nonhuman minds that might eventually outnumber, outsmart, obsolete and replace us? Should we risk loss of control of our civilization? Such decisions must not be delegated to unelected tech leaders. Powerful AI systems should be developed only once we are confident that their effects will be positive and their risks will be manageable. -futureoflife.org

"We’ve reached the point where these systems are smart enough that they can be used in ways that are dangerous for society," said Bengio, director of the University of Montreal’s Montreal Institute for Learning Algorithms, adding "And we don't yet understand."

Their concerns were laid out in a letter titled "Pause Giant AI Experiments: An Open Letter," which was spearheaded by the Future of Life Institute - a nonprofit advised by Musk.

The letter doesn’t call for all AI development to halt, but urges companies to temporarily stop training systems more powerful than GPT-4, the technology released this month by Microsoft Corp.-backed startup OpenAI. That includes the next generation of OpenAI’s technology, GPT-5.

OpenAI officials say they haven’t started training GPT-5. In an interview, OpenAI CEO Sam Altman said the company has long given priority to safety in development and spent more than six months doing safety tests on GPT-4 before its launch. -WSJ

"In some sense, this is preaching to the choir," said Altman. "We have, I think, been talking about these issues the loudest, with the most intensity, for the longest."

Goldman, meanwhile, says that up to 300 million jobs could be replaced with AI, as "two thirds of occupations could be partially automated by AI."

So-called generative AI creates original content based on human prompts - a technology which has already been implemented in Microsoft's Bing search engine and other tools. Soon after, Google deployed a rival called Bard. Other companies, including Adobe, Salesforce and Zoom, have all introduced advanced AI tools.

"A race starts today," said Microsoft CEO Satya Nadella in comments last month. "We’re going to move, and move fast."

One of the letter's organizers, Max Tegmark, who heads up the Future of Life Institute and is a physics professor at the Massachusetts Institute of Technology, calls it a "suicide race."

"It is unfortunate to frame this as an arms race," he said. "It is more of a suicide race. It doesn’t matter who is going to get there first. It just means that humanity as a whole could lose control of its own destiny."

The Future of Life Institute started working on the letter last week and initially allowed anybody to sign without identity verification. At one point, Mr. Altman’s name was added to the letter, but later removed. Mr. Altman said he never signed the letter. He said the company frequently coordinates with other AI companies on safety standards and to discuss broader concerns.

“There is work that we don’t do because we don’t think we yet know how to make it sufficiently safe,” he said. “So yeah, I think there are ways that you can slow down on multiple axes and that’s important. And it is part of our strategy.” -WSJ

Musk, an early founder and financial backer of OpenAI, and Wozniak have been outspoken about the dangers of AI for a while.

"There are serious AI risk issues," he tweeted.

Meta's chief AI scientist, Yann LeCun, didn't sign the open letter because he says he disagrees with its premise (without elaborating).

Of course, some are already speculating that the signatories may have ulterior motives.
