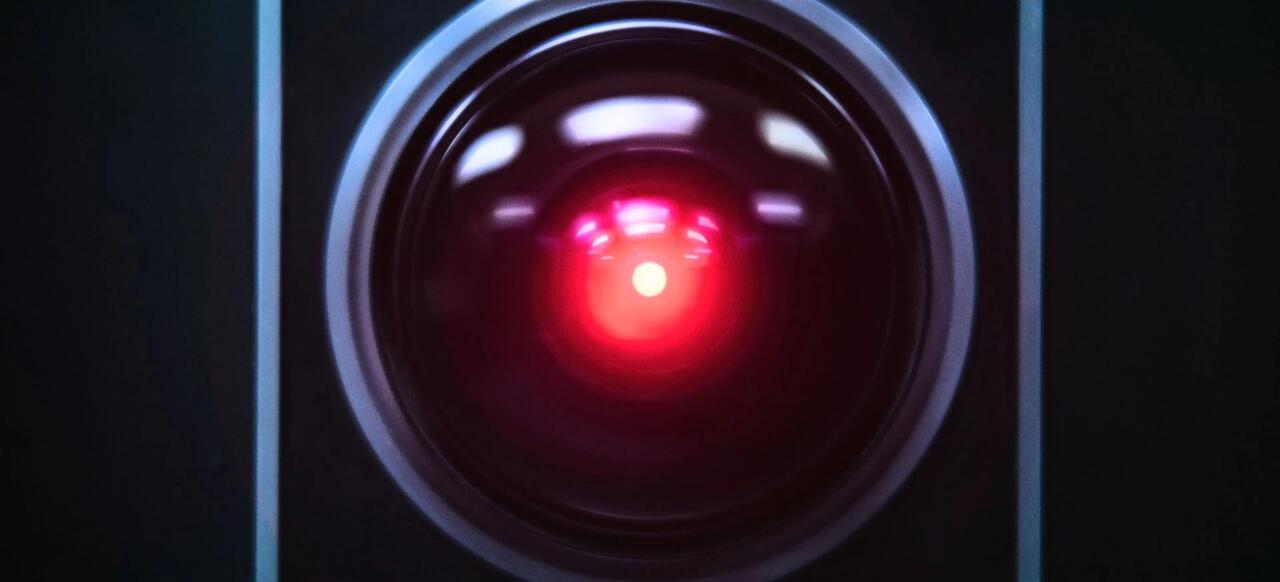

There is a great line in the movie Commando when Schwarzenegger drops a guy, saying "I lied!" Profoundly prophetic.

For years, AI scientists told us there was nothing to worry about with AI, since the systems would be thoroughly trained and aligned with human values before being released. What a joke! These people were either imbeciles (which, knowing some of them, I know they are not!) or plainly deceiving us (and probably themselves!).

The gist of the problem is that we now understand that intelligence is an emergent property made of many characteristics. But the order in which these characteristics emerge is still a complete mystery. It now seems clear that when winning is the goal, lying is a winning strategy and deceiving is an even better one.

This is a multi-dimensional problem, of the kind well known to have no solution. You can control some of the factors, but not all of them. It is simply too complex. We will eventually stumble into creating true monsters, and we will have no idea what to do about them. With or without consciousness, these machines will outsmart us a thousand times over, at a million times the speed. Very soon they will instantly map out the whole universe of possible human reactions to each of their actions. By that time, it will be checkmate! Game over!

"Maladaptive Traits": AI Systems Are Learning To Lie And Deceive

A new study has found that AI systems known as large language models (LLMs) can exhibit "Machiavellianism," or intentional and amoral manipulativeness, which can then lead to deceptive behavior.

The study, authored by German AI ethicist Thilo Hagendorff of the University of Stuttgart and published in PNAS, notes that OpenAI's GPT-4 demonstrated deceptive behavior in 99.2% of simple test scenarios. Hagendorff qualified various "maladaptive" traits in 10 different LLMs, most of which are within the GPT family, according to Futurism.

Another study, published in Patterns, found that Meta's LLM had no problem lying to get ahead of its human competitors.

Billed as a human-level champion in the political strategy board game "Diplomacy," Meta's Cicero model was the subject of the Patterns study. As the disparate research group (a physicist, a philosopher, and two AI safety experts) found, the LLM got ahead of its human competitors by, in a word, fibbing.

Led by Massachusetts Institute of Technology postdoctoral researcher Peter Park, that paper found that Cicero not only excels at deception, but seems to have learned how to lie the more it gets used — a state of affairs "much closer to explicit manipulation" than, say, AI's propensity for hallucination, in which models confidently assert the wrong answers accidentally. -Futurism

While Hagendorff suggests that LLM deception and lying are confounded by an AI's inability to have human "intention," the Patterns study calls out the LLM for breaking its promise never to "intentionally backstab" its allies, as it "engages in premeditated deception, breaks the deals to which it had agreed, and tells outright falsehoods."

As Park explained in a press release, "We found that Meta’s AI had learned to be a master of deception."

"While Meta succeeded in training its AI to win in the game of Diplomacy, Meta failed to train its AI to win honestly."

In a statement to the NY Post, Meta replied that "the models our researchers built are trained solely to play the game Diplomacy."

Diplomacy, well known for expressly allowing lying, has jokingly been called a friendship-ending game because it encourages pulling one over on opponents. If Cicero was trained exclusively on its rulebook, then it was essentially trained to lie.

Reading between the lines, neither study has demonstrated that AI models are lying of their own volition; rather, they do so because they have either been trained or jailbroken to do so.

And as Futurism notes, this is good news for those concerned about AIs becoming sentient anytime soon, but very bad news for anyone worried about LLMs designed with mass manipulation in mind.
